To answer this question, I will cover three points: 1. why we must regulate AI, 2. a comparison of existing AI regulations in the EU and Japan, and 3. how governments should regulate AI.

1. Why we must regulate AI:

・AI has a wide range of applications all around us: web search engines, recommendation systems, self-driving cars. AI is too important to be left to AI developers alone.

・AI has many serious flaws: lack of transparency (the black box problem), biases, and risks to human autonomy, privacy, safety, etc. These flaws call for further research on AI.

2. Comparing the legal frameworks of Japan and the EU:

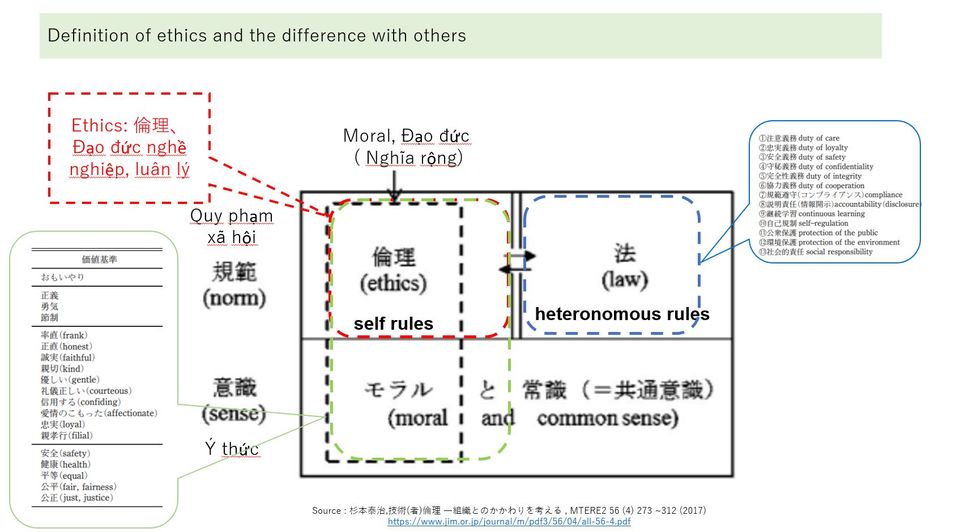

It is difficult to establish a single comprehensive legal framework for the whole world. Instead, legal systems should follow principles set at the highest level. To achieve these principles, countries use both binding and non-binding regulations. While the EU issues both "Soft Law" and "Hard Law" to regulate AI, Japan currently emphasizes "Soft Law" only.

In Soft Law, the EU's guidance, which emphasizes human rights, is far more detailed and informative than Japan's.

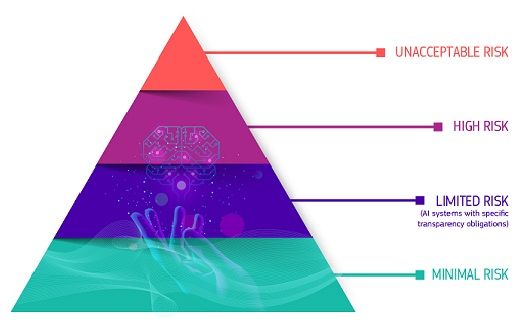

The EU's Hard Law on AI (the AI Act), based on a "Risk-Based Approach," imposes strict liability on AI developers. With it, the EU announced the world's first draft of an AI law. Japan's response to the AI Act: a legally binding law is "unnecessary at the moment."

3. How should governments regulate AI?

Should Japan and other countries follow the EU and regulate AI through binding law? Hard Law may interfere with and delay the development of technology, but it will limit the downsides of AI. In my opinion, AI is too important to be left to the private sector, especially big tech firms. AI's risks undeniably need to be mitigated.

However, is Hard Law too strict? Are there any other methods of AI governance?

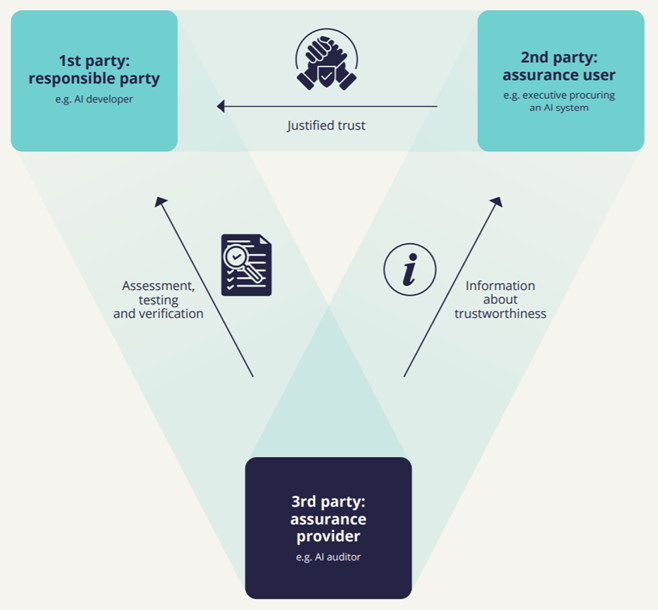

AI Assurance (UK, December 2021) may be a good governance model to consider.

Regardless of the form of regulation, governments should establish a mechanism for an independent third party to check and verify AI algorithms.